Name Conformance Filter¶

The foglamp-filter-name-conformance filter validates the asset name and datapoint names of readings flowing through a pipeline. It checks that each name either matches a configured pattern or appears in a configured set of names. In this way the filter enforces a unified namespace on the assets and datapoints within the pipeline.

If any of the checks fails, the reading is considered to have failed validation and a user-defined action is taken. The following actions are available when a reading fails the conformance checks:

Discard - The reading is removed from the data pipeline

Label - A new datapoint is added to the reading to label the reading as having failed conformance tests

Rename - The asset name of the reading is changed.

Filter Configuration¶

The name-conformance filter supports the configuration options described in the following sections.

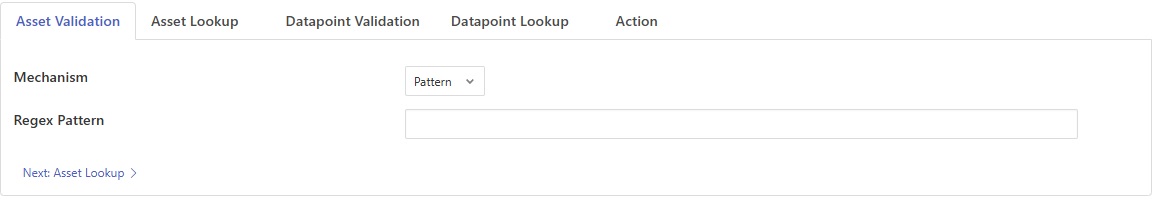

Asset Validation¶

Asset validation defines the validation rules for asset names that flow through the pipeline.

Mechanism: The mechanism to use for asset name validation. Supported options are

Pattern: Validate the asset name against a regular expression pattern.

Lookup: Validate the asset name against a lookup list of asset names.

None: No validation is performed on the asset name.

Regex Pattern: Regular expression pattern for asset name. Only valid if the Pattern mechanism is chosen.
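The three mechanisms can be sketched in Python as follows. This is an illustration only, not the plugin's implementation: the function name and its parameters are hypothetical, and the sketch assumes a pattern passes if it is found anywhere in the name, which is why the example pattern anchors itself with ^ and $.

```python
import re

def asset_name_is_valid(name, mechanism, pattern=None, lookup=()):
    """Return True if the asset name passes the configured check.

    Hypothetical helper: mechanism is one of "Pattern", "Lookup" or "None".
    """
    if mechanism == "None":
        # No validation is performed on the asset name.
        return True
    if mechanism == "Pattern":
        # Assumption: an unanchored search; anchor the pattern to match the whole name.
        return re.search(pattern, name) is not None
    if mechanism == "Lookup":
        # The name must appear in the configured list of asset names.
        return name in lookup
    raise ValueError(f"unknown mechanism: {mechanism}")

print(asset_name_is_valid("pump_12", "Pattern", pattern=r"^pump_[0-9]+$"))
print(asset_name_is_valid("boiler", "Lookup", lookup={"chiller", "pump_12"}))
```

With these inputs the first call accepts the name and the second rejects it, because "boiler" is not in the lookup list.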

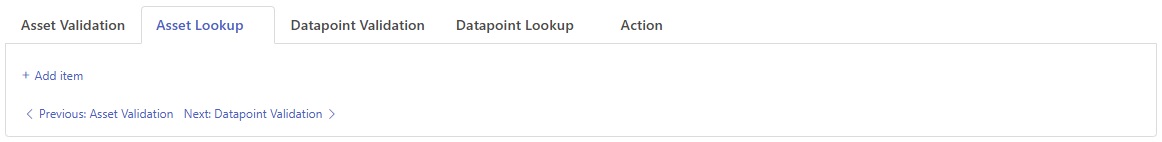

Asset Lookup¶

Asset lookup defines the list of asset names that are considered valid. It applies only when the mechanism for asset validation is set to “Lookup”.

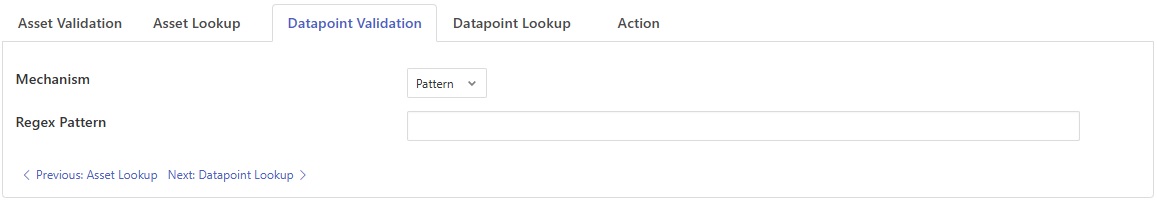

Datapoint Validation¶

Datapoint validation defines the validation rules for datapoint names that flow through the pipeline.

Mechanism: The mechanism to use for datapoint name validation. Supported options are

Pattern: Validate the datapoint name against a regular expression pattern.

Lookup: Validate the datapoint name against a lookup list of datapoint names.

None: No validation is performed on the datapoint name.

Regex Pattern: Regular expression pattern for datapoint names. Only valid if the Pattern mechanism is chosen.
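Because a reading can carry several datapoints, every datapoint name has to be checked. A minimal sketch of pattern-based checking is shown below. The dictionary layout of the reading and the helper name are assumptions made for the example, not the plugin's internal representation.

```python
import re

def invalid_datapoint_names(reading, pattern):
    """Return the datapoint names in a reading that do not match the pattern.

    Hypothetical helper; the reading is modelled as a plain dict.
    """
    return [
        name
        for name in reading["datapoints"]
        if re.search(pattern, name) is None
    ]

# Illustrative reading: one conforming and one non-conforming datapoint name.
reading = {
    "asset": "pump_12",
    "datapoints": {"temperature": 21.5, "Flow Rate": 3.2},
}

# Lower-case letters and underscores only.
bad_names = invalid_datapoint_names(reading, r"^[a-z_]+$")
print(bad_names)  # ['Flow Rate']
```

In this sketch the reading would fail validation, since at least one datapoint name does not conform.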

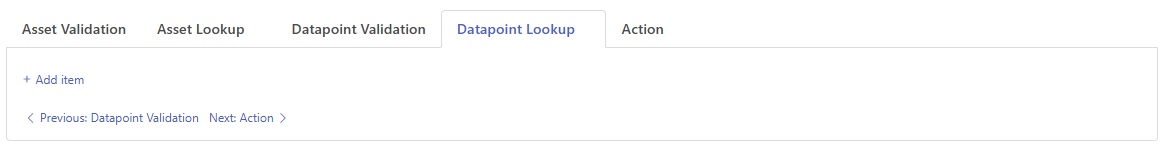

Datapoint Lookup¶

Datapoint lookup defines the list of datapoint names that are considered valid. It applies only when the mechanism for datapoint validation is set to “Lookup”.

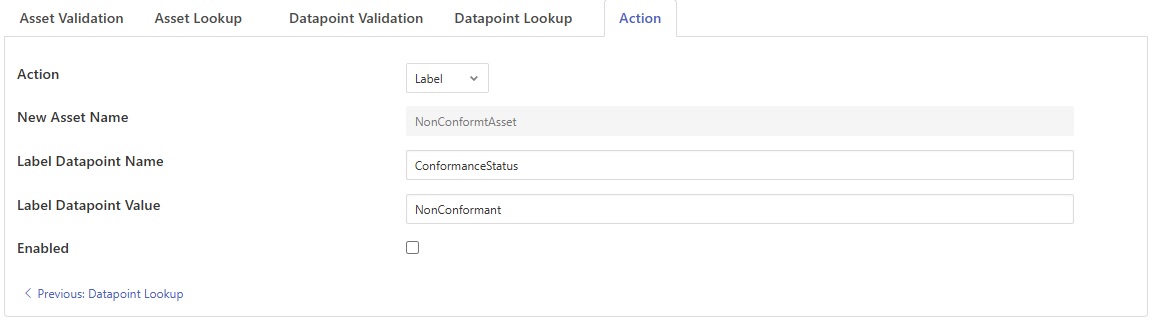

Action¶

Action defines the actions to be taken when asset or datapoint names fail validation.

Action: An action to be taken if validation fails. Supported options are

Rename: Change the asset name of readings that have failed validation.

Label: Label readings that have failed validation by adding a datapoint to the reading.

Discard: Remove readings that have failed validation from the pipeline.

New Asset Name: The asset name to assign to readings that fail validation. This is applicable only for the action “Rename”.

Label Datapoint Name: The name of the datapoint that is added to a reading to label it. This is applicable only for the action “Label”.

Label Datapoint Value: The value of the label datapoint in a reading. This is applicable only for the action “Label”.

Enabled: A toggle to enable or disable the filter. When enabled, the filter will validate asset and datapoint names according to the specified rules.
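The effect of each action on a reading that has failed validation can be sketched as below. This is a hedged illustration rather than the plugin's code: the function, its parameter names, and the dict-based reading are all hypothetical stand-ins for the configuration items described above.

```python
def apply_action(reading, action, new_asset_name="", label_name="", label_value=""):
    """Apply the configured action to a reading that failed validation.

    Returns the resulting reading, or None when the reading is discarded.
    The input reading is not modified.
    """
    if action == "Discard":
        # The reading is removed from the pipeline.
        return None
    if action == "Rename":
        # Only the asset name changes; datapoints are left as they are.
        return {**reading, "asset": new_asset_name}
    if action == "Label":
        # A new datapoint is added to mark the reading as non-conforming.
        datapoints = {**reading["datapoints"], label_name: label_value}
        return {**reading, "datapoints": datapoints}
    raise ValueError(f"unknown action: {action}")

failed = {"asset": "Pump-12", "datapoints": {"temperature": 21.5}}

renamed = apply_action(failed, "Rename", new_asset_name="nonconforming")
labelled = apply_action(failed, "Label", label_name="conformance", label_value="failed")
discarded = apply_action(failed, "Discard")
```

Here `renamed` keeps its datapoints under the new asset name, `labelled` gains one extra datapoint, and `discarded` is `None`.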

Regular Expressions¶

The filter supports the standard Linux regular expression syntax for the asset name and datapoint name.

| Expression | Description |
|---|---|
| . | Matches any character |
| [] | Matches any of the characters enclosed in the brackets |
| [a-z] | Matches any character in the range between the two given |
| * | Matches zero or more occurrences of the previous item |
| + | Matches one or more occurrences of the previous item |
| ? | Matches zero or one occurrence of the previous item |
| {i,j} | Matches between i and j occurrences of the previous item, where i and j are integers |
| ^ | Matches the start of the string |
| $ | Matches the end of the string |
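The constructs in the table can be tried out with a short script. The sketch below uses Python's `re` module as a stand-in. The filter's own regular expression engine may differ in other details, but these basic constructs behave the same way in Python. The names and patterns are invented for the example.

```python
import re

def matches(pattern, name):
    """Return True if the pattern is found in the name (unanchored search)."""
    return re.search(pattern, name) is not None

# (pattern, name, expected result)
examples = [
    (r"^pump.$", "pump1", True),         # . matches any single character
    (r"^[abc]$", "b", True),             # [] matches one of the listed characters
    (r"^[a-z]+$", "flow", True),         # [a-z] range, + one or more
    (r"^ab*$", "a", True),               # * allows zero occurrences of b
    (r"^ab+$", "a", False),              # + requires at least one b
    (r"^colou?r$", "color", True),       # ? makes the u optional
    (r"^[0-9]{2,4}$", "12345", False),   # {2,4} allows two to four digits only
    (r"^temp", "temperature", True),     # ^ anchors the start of the string
    (r"ure$", "temperature", True),      # $ anchors the end of the string
]

results = [matches(pattern, name) for pattern, name, _ in examples]
```

Each entry in `results` agrees with the expected value in the third column of `examples`.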

See Also¶

foglamp-filter-asset-conformance - A plugin for performing basic sanity checking on the data flowing in the pipeline.

foglamp-filter-md5 - FogLAMP md5 filter computes an MD5 hash of a reading’s JSON representation and stores the hash as a new datapoint within the reading.

foglamp-filter-md5verify - FogLAMP MD5 Verify plugin verifies the integrity of readings by computing a new MD5 hash and comparing it against the stored MD5 datapoint.

foglamp-filter-partition - Partition filter plugin for splitting string datapoints into multiple new datapoints.

foglamp-filter-sha2 - FogLAMP sha2 filter computes a SHA-2 hash of a reading’s JSON representation and stores the hash as a new datapoint within the reading.

foglamp-filter-sha2verify - FogLAMP SHA2 Verify plugin verifies the integrity of readings by computing a new SHA-2 hash and comparing it against the stored SHA-2 datapoint.