Sigma Data Cleansing Filter

The foglamp-filter-sigmacleanse filter cleanses a data stream by either removing outliers or labeling them to facilitate further processing later in the pipeline. It builds a mean and standard deviation for the data over time, then removes or labels any value that differs from that mean by more than a configurable factor of the standard deviation.

The plugin is designed for situations in which a sensor or item of equipment produces occasional anomalous results. These will be removed from the data passed onward within the system to provide a cleaner data stream. Take care, however, that the values being removed really do represent sensor anomalies and are not the result of problems with the condition being monitored. If a sensor produces a high percentage of anomalous results, it should be considered for replacement.

To monitor the anomaly rate, the plugin can optionally produce an hourly statistics report showing the number of readings that were forwarded as good, labeled as possible anomalies, or discarded.
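The bookkeeping behind such a report can be sketched as below. This is only an illustration: the class name `HourlyStats`, the outcome names and the reset-on-report behavior are assumptions, not the plugin's actual implementation or asset layout.

```python
from collections import Counter

# Hypothetical outcome names, chosen to mirror the three categories in the report.
OUTCOMES = ("forwarded", "labeled", "discarded")


class HourlyStats:
    """Count reading outcomes for one reporting period."""

    def __init__(self):
        self.counts = Counter()

    def record(self, outcome):
        if outcome not in OUTCOMES:
            raise ValueError(f"unknown outcome: {outcome}")
        self.counts[outcome] += 1

    def report(self):
        """Return the counts for the period and start a new period."""
        snapshot = {name: self.counts[name] for name in OUTCOMES}
        self.counts.clear()
        return snapshot
```

Calling `report()` once per hour would yield one set of counts per reporting period.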

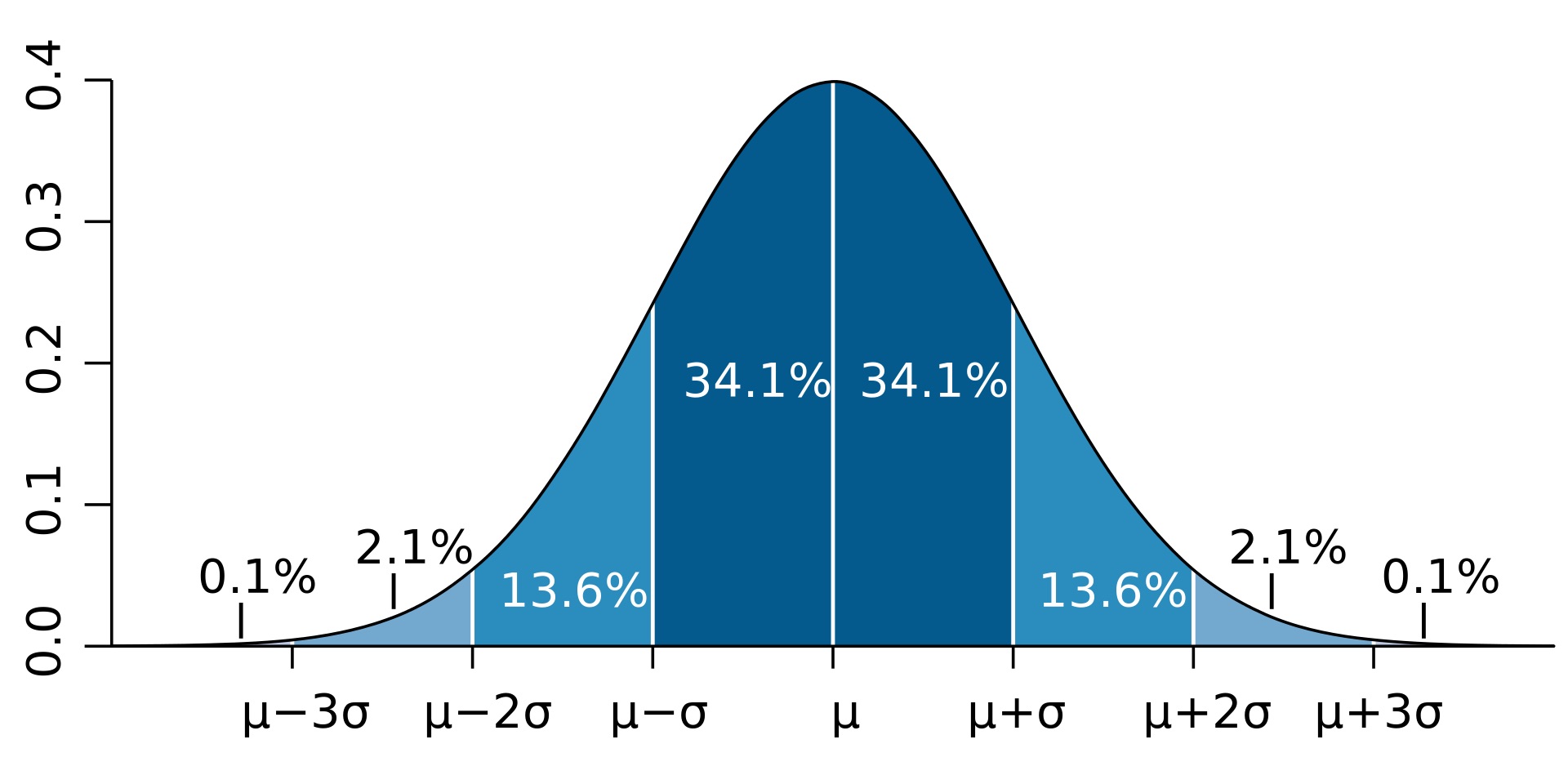

The method used to determine whether a value is anomalous is based on the premise that data from a given sensor will follow a normal distribution around a mean value that is sampled over time. The probability of a value being valid decreases the further the value is from the mean. This gives rise to the classic bell-shaped distribution of values as shown below.

The filter saves its state on shutdown and reloads it on startup, so that after a restart it knows whether to start rejecting data or to continue determining what is normal. It also saves the sigma map, which contains the normalization statistics for each datapoint, on shutdown and reloads it on startup.
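As a rough illustration of this save and reload cycle, the sketch below writes the state to a JSON file. The file format, the function names `save_state` and `load_state`, and the shape of the sigma map are all assumptions made for the example. The plugin's real persistence mechanism is not described here.

```python
import json


def save_state(path, learning, sigma_map):
    """Persist whether the filter is still learning, plus per-datapoint statistics."""
    with open(path, "w") as f:
        json.dump({"learning": learning, "sigma_map": sigma_map}, f)


def load_state(path):
    """Reload saved state; with no saved state, start learning from scratch."""
    try:
        with open(path) as f:
            state = json.load(f)
    except FileNotFoundError:
        return True, {}
    return state["learning"], state["sigma_map"]
```

With no saved state the sketch falls back to learning from an empty sigma map, which corresponds to a first start.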


It can be seen from the diagram above how the probability drops as a value moves away from the mean. The sigma values here are the standard deviations observed for good data samples. Outlier values that are discarded do not contribute to the calculation of the standard deviation.
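The approach described above can be sketched as follows. This is a minimal illustration rather than the plugin's implementation: the names `RunningStats`, `is_outlier` and `cleanse`, the fixed `learn` count, and the use of Welford's method with a population standard deviation are all assumptions.

```python
import math


class RunningStats:
    """Running mean and standard deviation using Welford's method."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared differences from the mean

    def add(self, x):
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    @property
    def stddev(self):
        # Population standard deviation (an assumption for this sketch).
        return math.sqrt(self.m2 / self.n) if self.n > 0 else 0.0


def is_outlier(stats, value, factor=3.0):
    """True if value differs from the mean by more than factor * sigma."""
    return abs(value - stats.mean) > factor * stats.stddev


def cleanse(values, factor=3.0, learn=5):
    """Drop outliers once `learn` values have been used to build the statistics."""
    stats = RunningStats()
    kept = []
    for i, v in enumerate(values):
        if i >= learn and is_outlier(stats, v, factor):
            continue  # discarded values do not update the statistics
        stats.add(v)
        kept.append(v)
    return kept
```

For example, `cleanse([10.0, 10.2, 9.8, 10.1, 9.9, 50.0, 10.0])` drops the 50.0 reading and keeps the rest, because 50.0 lies far more than three standard deviations from the mean built over the first five values.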

To add a sigma cleansing filter to your service:

Click on the Applications add icon for your service or task.

Select the sigmacleanse plugin from the list of available plugins.

Name your cleansing filter.

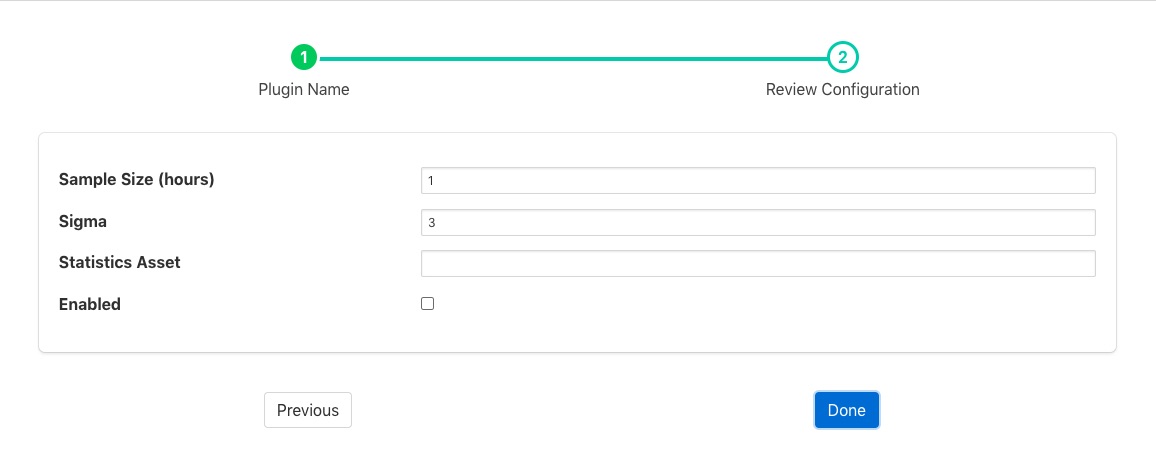

Click Next and you will be presented with the following configuration page.

Configure the Sigma settings for the sigma cleanse filter:

Sigma: The factor to apply to the standard deviation. The default is 3, in which case any value that differs from the mean by more than 3 * sigma will be removed.

Statistics Asset: If this is not empty, a statistics asset will be added every hour that details the number of readings that have been forwarded by the filter and the number removed. The name of that asset matches the value entered here.

Enable: Enable or disable the filter.

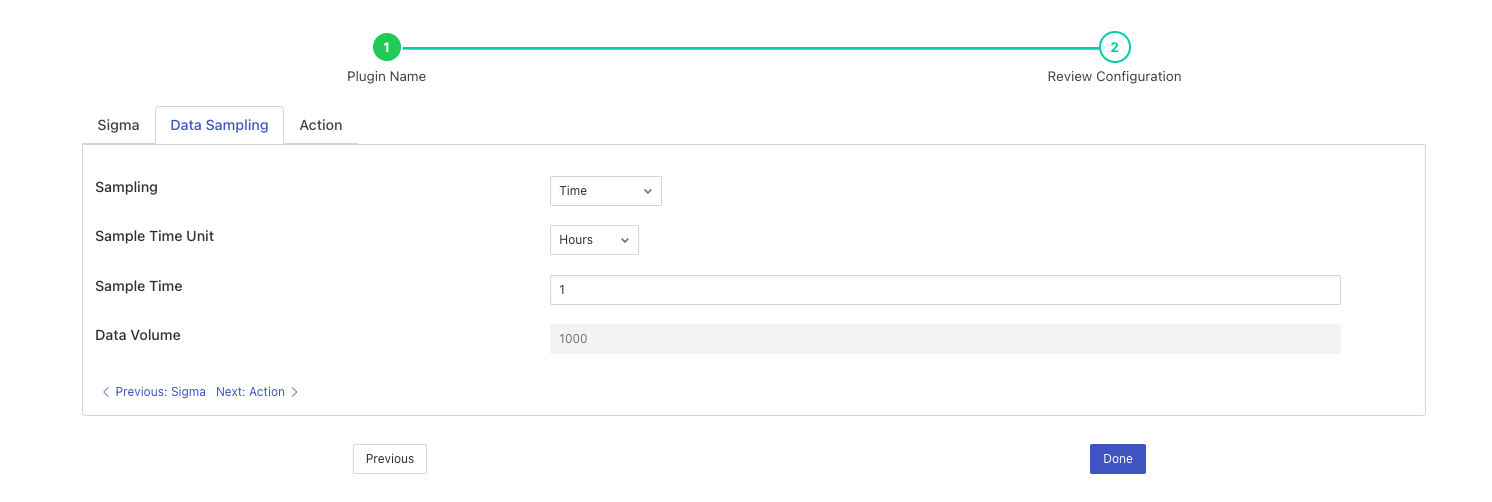

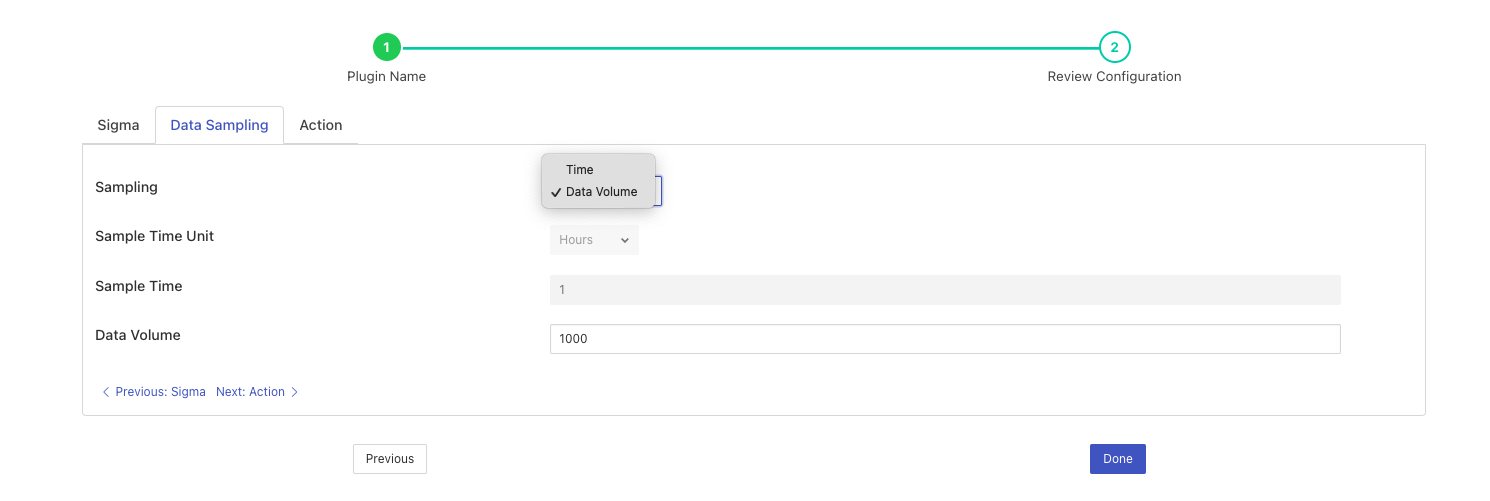

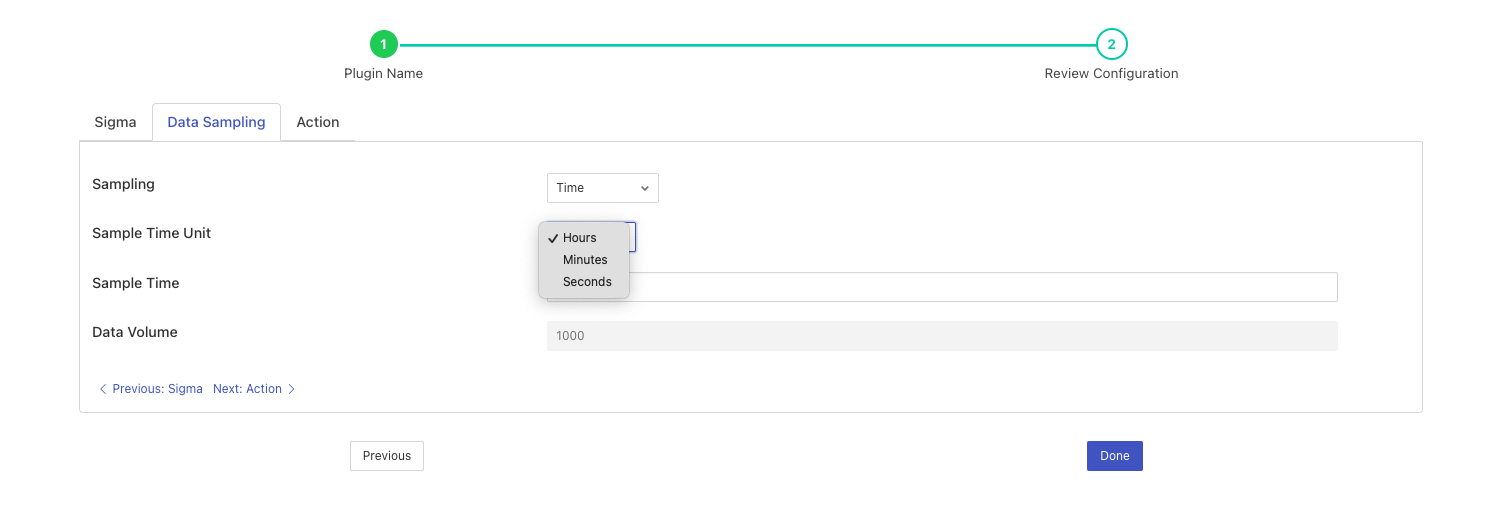

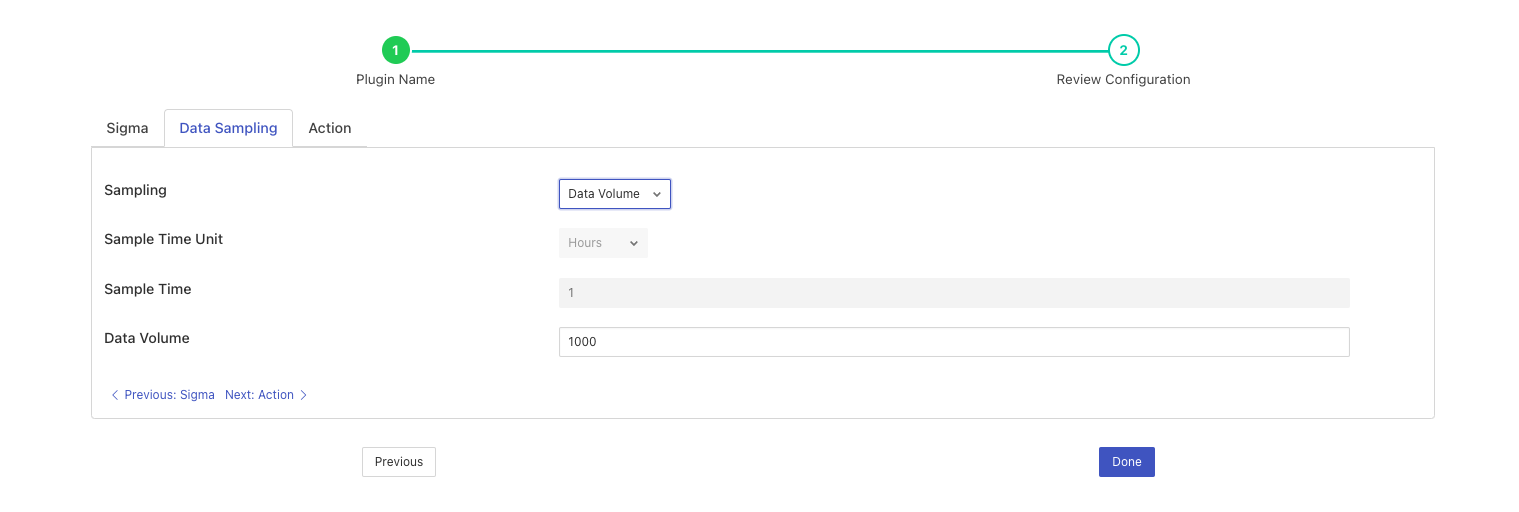

Click Data Sampling to configure the data sampling for the filter.

Sampling: This option allows you to choose between Time and Data Volume as the sampling method for the filter. If Time is selected, you can configure the Sample Time and Sample Time Unit (Hours, Minutes, or Seconds). If Data Volume is selected, you can configure the Data Volume (number of readings).

Sample Time Unit: This option allows you to choose the unit for the Sample Time configuration, with options of Hours, Minutes, and Seconds.

Sample Time: The number of hours, minutes or seconds over which an initial mean and standard deviation are built before any cleansing commences.

Data Volume: This option is only available if the Sampling method is set to Data Volume. It allows you to configure the number of data readings to use for the sigma cleansing calculation, instead of a time-based window.
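The two sampling methods can be pictured with the sketch below, which decides whether a reading still belongs to the initial learning period. The class name `LearningWindow`, its parameter names and the default values are hypothetical and chosen only to mirror the options above. The sketch is not the plugin's code.

```python
from datetime import datetime, timedelta

UNIT_SECONDS = {"Seconds": 1, "Minutes": 60, "Hours": 3600}


class LearningWindow:
    """Track whether the filter is still building its initial statistics."""

    def __init__(self, sampling, sample_time=1, unit="Hours", data_volume=1000):
        if sampling not in ("Time", "Data Volume"):
            raise ValueError(f"unknown sampling method: {sampling}")
        self.sampling = sampling
        self.duration = timedelta(seconds=sample_time * UNIT_SECONDS[unit])
        self.data_volume = data_volume
        self.started = None  # timestamp of the first reading seen
        self.seen = 0        # number of readings seen so far

    def still_learning(self, timestamp):
        """Record one reading and report whether it falls in the learning period."""
        if self.started is None:
            self.started = timestamp
        self.seen += 1
        if self.sampling == "Time":
            return timestamp - self.started < self.duration
        return self.seen <= self.data_volume
```

With `LearningWindow("Data Volume", data_volume=2)` the first two readings count towards learning and the third does not. With `LearningWindow("Time", sample_time=1, unit="Minutes")` learning ends for readings stamped 60 seconds or more after the first one.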

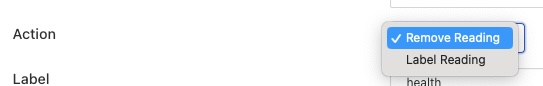

Click the Action tab to configure the action to take when a reading is detected as a possible anomaly.

Action: The action to take if the reading being processed is detected as a possible anomaly.

The reading can either be labeled, by adding a new datapoint to the reading, or it may be removed from the data stream.

Label: The name of the data point to be used when labeling the reading as a possible anomaly.
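The effect of the two actions on a single reading can be sketched as below. The function name `apply_action`, the action strings `"Label"` and `"Remove"`, the default label name and the value stored in the label datapoint are all assumptions for illustration. The plugin may name its options and represent the label differently.

```python
def apply_action(reading, anomalous, action="Remove", label="anomaly"):
    """Return the reading to forward, or None if it is removed from the stream."""
    if not anomalous:
        return reading
    if action == "Label":
        labeled = dict(reading)  # copy so the caller's reading is left unchanged
        labeled[label] = True    # hypothetical marker value
        return labeled
    if action == "Remove":
        return None
    raise ValueError(f"unknown action: {action}")
```

A reading of `{"temperature": 50.0}` flagged as anomalous would be forwarded as `{"temperature": 50.0, "anomaly": True}` under the label action and dropped under the remove action.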

Enable your filter and click Done.

See Also

foglamp-filter-amber - A FogLAMP filter to pass data to the Boon Logic Nano clustering engine

foglamp-filter-asset - A FogLAMP processing filter that is used to block or allow certain assets to pass onwards in the data stream

foglamp-filter-asset-conformance - A plugin for performing basic sanity checking on the data flowing in the pipeline.

foglamp-filter-asset-validation - A plugin for performing basic sanity checking on the data flowing in the pipeline.

foglamp-filter-batch-label - A filter to attach batch labels to the data. Batch numbers are updated based on conditions seen in the data stream.

foglamp-filter-change - A FogLAMP processing filter plugin that only forwards data that changes by more than a configurable amount

foglamp-filter-conditional-labeling - Attach labels to the reading data based on a set of expressions matched against the data stream.

foglamp-filter-consolidation - A Filter plugin that consolidates readings from multiple assets into a single reading

foglamp-filter-delta - A FogLAMP processing filter plugin that removes duplicates from the stream of data and only forwards new values that differ from previous values by more than a given tolerance

foglamp-filter-ednahint - A hint filter for controlling how data is written using the eDNA north plugin to AVEVA’s eDNA historian

foglamp-filter-enumeration - A filter to map between symbolic names and numeric values in a datapoint.

foglamp-filter-expression - A FogLAMP processing filter plugin that applies a user-defined formula to the data as it passes through the filter

foglamp-filter-fft - A FogLAMP processing filter plugin that calculates a Fast Fourier Transform across sensor data

foglamp-filter-inventory - A plugin that can inventory the data that flows through a FogLAMP pipeline.

foglamp-filter-md5 - FogLAMP md5 filter computes an MD5 hash of a reading’s JSON representation and stores the hash as a new datapoint within the reading.

foglamp-filter-metadata - A FogLAMP processing filter plugin that adds metadata to the readings in the data stream

foglamp-filter-normalise - Normalise the timestamps of all readings that pass through the filter. This allows data collected at different rates or with skewed timestamps to be directly compared.

foglamp-filter-omfhint - A filter plugin that allows data to be added to assets that will provide extra information to the OMF north plugin.

foglamp-filter-partition - Partition filter plugin for splitting string datapoints into multiple new datapoints.

foglamp-filter-rate - A FogLAMP processing filter plugin that sends reduced rate data until an expression triggers sending full rate data

foglamp-filter-rms - A FogLAMP processing filter plugin that calculates RMS value for sensor data

foglamp-filter-scale - A FogLAMP processing filter plugin that applies an offset and scale factor to the data

foglamp-filter-scale-set - A FogLAMP processing filter plugin that applies a set of scale factors to the data

foglamp-filter-sha2 - FogLAMP sha2 filter computes a SHA-2 hash of a reading’s JSON representation and stores the hash as a new datapoint within the reading.

foglamp-filter-statistics - Generic statistics filter for FogLAMP data that supports the generation of mean, mode, median, minimum, maximum, standard deviation and variance.