Databricks SQL

The foglamp-north-databricks-sql plugin sends data from FogLAMP to Databricks using the ODBC driver. It transfers data from FogLAMP’s buffer into Databricks’s cloud-based data warehouse.

Configuration Details

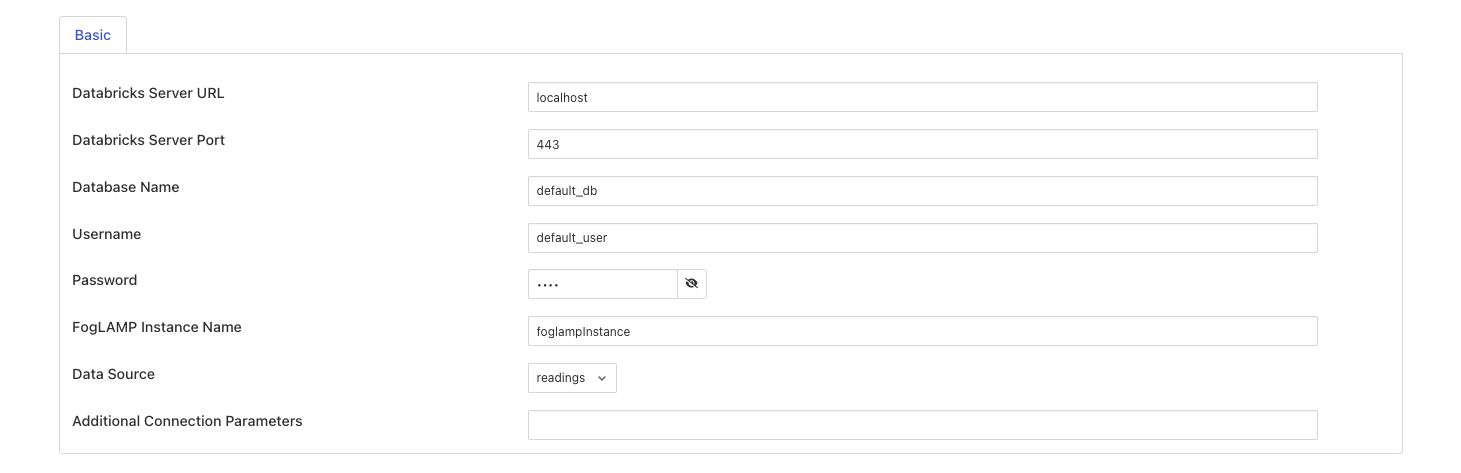

The plugin requires the following configuration parameters:

Databricks Server URL: The Databricks server URL (e.g., xyz.cloud.databricks.com).

Databricks Server Port: The Databricks server port (default: 443).

Schema: The Databricks schema name where the data will be stored.

Username: The username for connecting to the Databricks account.

Password: The password for authenticating the Databricks account.

FogLAMP Instance Name: The name of this FogLAMP instance.

Data Source: The source of FogLAMP data to be sent to Databricks (e.g., readings).

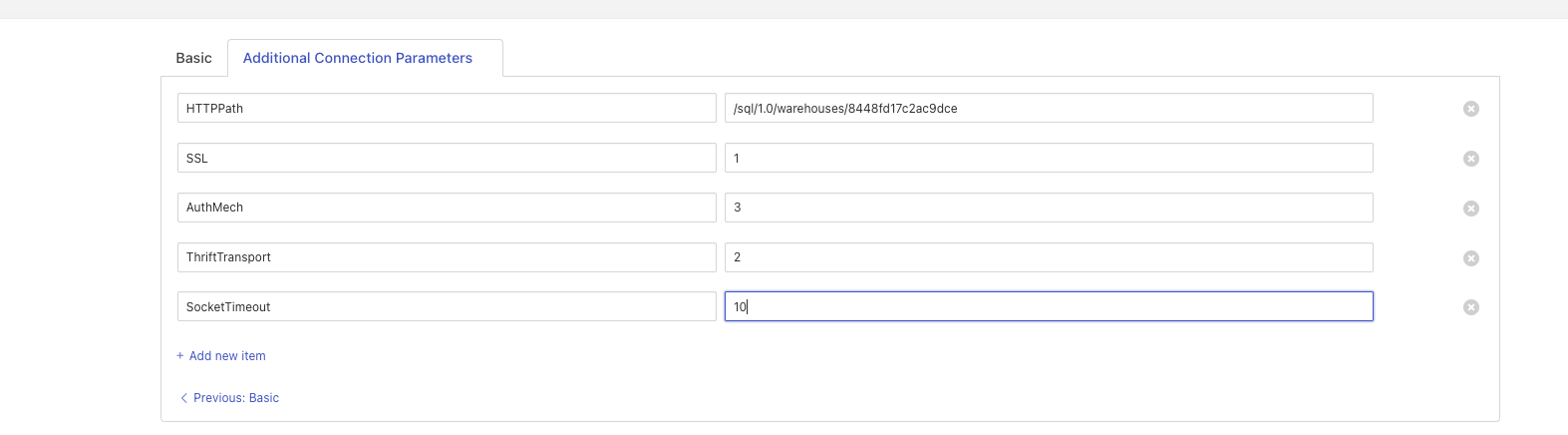

Additional Connection Parameters: Any additional connection parameters required for the Databricks connection (e.g., HTTPPath, AuthMech, etc.).

For example, add the following parameters to the Additional Connection Parameters field for token-based authentication: HTTPPath=your_http_path, AuthMech=3, SSL=1, ThriftTransport=2

To override the default socket timeout and set it to 10 seconds, add the following parameter: SocketTimeout=10

Refer to the Databricks documentation for more information on the additional connection parameters.
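As a rough illustration of how a comma-separated list such as the one above could be combined with the server URL and port, the following Python sketch parses the field and joins everything into an ODBC-style key=value string. The function names are hypothetical, and the way the plugin actually assembles its connection string is not documented here, so treat the output format as an assumption.

```python
# Illustrative sketch only: the helper names are hypothetical and the
# plugin's real connection-string handling may differ.

def parse_additional_params(text):
    """Split 'Key=Value, Key=Value' into a dict of strings.

    Values containing commas are not supported by this simple parser.
    """
    params = {}
    for item in text.split(","):
        item = item.strip()
        if not item:
            continue
        # Split on the first '=' only, so values may contain '='.
        key, sep, value = item.partition("=")
        if not sep or not key.strip():
            raise ValueError(f"expected Key=Value, got {item!r}")
        params[key.strip()] = value.strip()
    return params


def build_connection_string(host, port, additional):
    """Join host, port and extra parameters with ';' (assumed layout)."""
    parts = {"Host": host, "Port": str(port)}
    parts.update(parse_additional_params(additional))
    return ";".join(f"{key}={value}" for key, value in parts.items())


conn = build_connection_string(
    "xyz.cloud.databricks.com",
    443,
    "HTTPPath=your_http_path, AuthMech=3, SSL=1, ThriftTransport=2, SocketTimeout=10",
)
print(conn)
```

The parser rejects entries without an `=` sign, which makes a mistyped parameter visible before a connection is attempted.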

The plugin will create a table in Databricks if it does not already exist. The table structure is dynamically determined based on the data provided by FogLAMP.

Hints

The filter plugin foglamp-filter-databricks-hints allows hints to be added to the readings. These hints affect how the data is stored in, or mapped to, Databricks SQL. Currently only one hint is supported: the table name. The filter adds a new datapoint, Databrics-Hint, to each existing reading.

If the foglamp-filter-databricks-hints filter is used with foglamp-north-databricks-sql, the table is created with the table name provided in the hint.

Special Characters Handling in SQL Statements

The plugin ensures proper handling of special characters in SQL statements by escaping them appropriately using backticks. This approach prevents special characters from interfering with SQL syntax and maintains data integrity during query execution.
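The idea of backtick quoting can be sketched in Python as follows. This is not the plugin's code; `quote_identifier` and `build_select` are hypothetical helpers that only show how wrapping a name in backticks lets characters such as a hyphen or percent sign appear in an identifier without being parsed as SQL operators.

```python
# Illustrative sketch only: hypothetical helpers, not the plugin's code.

def quote_identifier(name):
    """Wrap a table or column name in backticks."""
    if not name:
        raise ValueError("identifier must not be empty")
    if "`" in name:
        # A backtick inside the name would end the quoted identifier early.
        raise ValueError("identifier must not contain a backtick")
    return f"`{name}`"


def build_select(table, columns):
    """Build a SELECT statement with every identifier quoted."""
    column_list = ", ".join(quote_identifier(col) for col in columns)
    return f"SELECT {column_list} FROM {quote_identifier(table)}"


statement = build_select("sensor-1", ["temp%", "timestamp"])
print(statement)  # SELECT `temp%`, `timestamp` FROM `sensor-1`
```

Without the backticks, `sensor-1` would be read as the expression `sensor` minus `1` rather than as a table name.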

Restrictions on Table and Column Names

Certain characters are restricted and cannot be used in table or column names due to SQL limitations or potential conflicts. The following characters are not allowed:

Backslash (\)

Forward slash (/)

Single quote (')

Backtick (`)

Colon (:)

Dot (.)

Space ( )
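A name can be checked against this list before data is sent. The Python sketch below is a hypothetical pre-check, not part of the plugin; it assumes the single quote in the list means the plain ASCII apostrophe, and it reports which restricted characters a candidate name contains.

```python
# Illustrative sketch only: a hypothetical pre-check, not plugin code.
# Restricted characters: backslash, forward slash, single quote
# (assumed to be the ASCII apostrophe), backtick, colon, dot and space.
FORBIDDEN = set("\\/'`:. ")


def find_forbidden(name):
    """Return the restricted characters present in name, sorted."""
    return sorted({ch for ch in name if ch in FORBIDDEN})


def is_valid_name(name):
    """True if name is non-empty and has no restricted characters."""
    return bool(name) and not find_forbidden(name)


for candidate in ["pump_1", "pump.1", "site a/b"]:
    print(candidate, is_valid_name(candidate), find_forbidden(candidate))
```

Reporting the offending characters, rather than only a true or false result, makes it easier to rename an asset or datapoint so that it maps cleanly to a table or column.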